Biography

I’m a graduate student pursuing an MASc in AI (research) at Queen’s University. My research interests include generative models, self-supervised learning, multi-modality, and learning universal representations of time-series. I’m an author of 10+ publications with 100+ citations in total. Apart from research, I have prior experience in data engineering and in deploying machine learning models at scale on cloud/serverless infrastructure to integrate with real-world products and applications.

Achievements:

- Vector Scholarship in AI

- MITACS Globalink Graduate Fellow

- MASc in Artificial Intelligence, September 2022 to Present, Queen's University

- B.Tech in Electronics and Telecommunication, July 2018 to July 2022, KIIT University

Experience

Overview:

- Graduate research supervised by Dr. Ali Etemad.

- Implemented a state-of-the-art High-Fidelity PPG-to-ECG translation system powered by a novel class of Diffusion Models. Demonstrated the ability to detect a range of Cardiac conditions/diseases using synthetic ECGs with significantly higher F1 than the input PPGs. Paper under review at an A* conference.

- Developed a Speech Emotion classifier capable of explicitly understanding the linguistic and prosodic aspects of emotions using Cross-modal Knowledge distillation. Experiments show state-of-the-art performance on IEMOCAP. Paper to be submitted to an A* conference.

Overview:

- Presently working on few-shot prompt learning on spoken content for SHL’s Interview Intelligence platform.

- Developed algorithms for detecting repetitive phrases, filler phrases, self-introductions and organization introductions.

Overview:

- Collaborated with a PhD student on a project focused on modeling continuous conformational changes in cryo-ET images with unsupervised representation learning, under the supervision of Dr. Min Xu.

- Conducted a comprehensive literature review and baseline method implementations.

Overview:

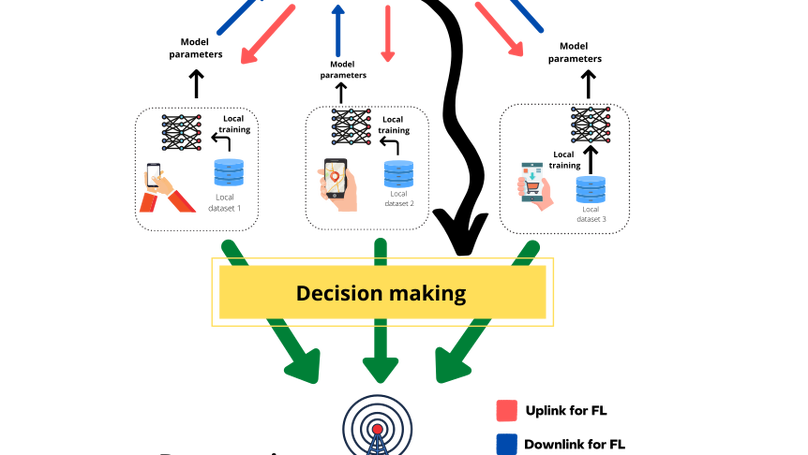

- Conducted cross-disciplinary research at the intersection of Deep learning and wireless communications, under the supervision of Dr. Omer Waqar from Thompson Rivers University, Canada.

- Authored a comprehensive review paper addressing a gap in the literature on the bi-directional interplay of Federated learning and wireless communications, accepted at the journal Transactions on Emerging Telecommunications Technologies.

- Designed a novel unsupervised learning algorithm for energy and power optimization in UAV networks. The paper was presented at IEEE UEMCON 2021 and received the Best Presenter award.

Overview:

- Leading the project on variable-length synthetic handwriting image generation using Generative Adversarial networks.

- Academia-Industry collaboration with a 6-member team consisting of myself, another student, Prof. Vimal Srivastava, Prof. Manoranjan Kumar and two mentors from Samsung Research, Bangalore.

- The generated synthetic data is being used to train better handwritten text recognition (HTR) models for the HTR feature in the OCR system of Samsung smartphones.

Overview:

- Focused on building a full-stack data science pipeline, from data collection to model deployment, to power the AI engine of Relevense (https://www.relevense.com/), a flagship market intelligence product co-funded with grants of the Europees Fonds voor Regionale Ontwikkeling (EFRO) and Samenwerkingsverband Noord Nederland (SNN).

- Projects include a tweet-based emotion recognition API, a Big-5 personality classification API, facial expression recognition, and a receptive audience recommendation system.

- Reduced AWS costs by 60% by shifting the backend to a serverless architecture with multiple Lambda functions, DynamoDB, Timestream and S3.

- Single-handedly created end-to-end ML pipelines, with all models beyond 95% accuracy, along with efficient monitoring of out-of-training-distribution inference events.

Overview:

- Collaborated with a team of 48 while working with our client, World Resources Institute (https://www.wri.org/), on a project leveraging NLP to find geographical locations with climate hazards and potential gaps for minimizing climate change impacts across the globe. Deployed a dashboard designed with Streamlit for easy inference. Technical blog on the project: https://omdena.com/blog/climate-change-impacts/

- Led a team of 48 in building the data processing and machine learning backend for a Dutch client’s market intelligence product. Received a full-time offer from the client due to extraordinary contributions to the project.

Overview:

- Built an end-to-end NLP pipeline for multi-document abstractive summarization of radiology reports of COVID-19 patients.

- Trained Longformer and BERT models on a Slurm multi-GPU cluster on a HIPAA-protected server.

- Achieved a ROUGE-1 score of 0.410 on the test dataset.

Overview:

- Developed a full-stack web-based invoice management application following an end-to-end data science product development lifecycle, guided by mentors.

- Responsibilities included identifying user requirements, designing the user experience, and building the data pipelines and machine learning models along with the relevant UI components and backend design.

- Developed a state-of-the-art payment prediction system using XGBoost regression, with a root-mean-squared error of 0.1 under 5-fold cross-validation.

Recent Publications

Featured Publications

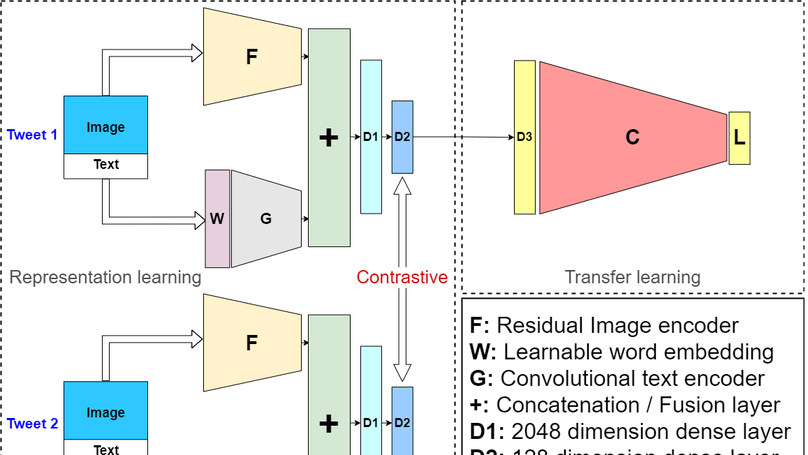

Hateful or offensive content has become increasingly common on social media platforms in recent years, and the problem is now widespread. There is a pressing need for effective automatic solutions for detecting such content, especially given the gigantic size of social media data. Although significant progress has been made in the automated identification of offensive content, most of the focus has been on using only textual information. With the rise in visual information shared on these platforms, it is quite common for hateful content to appear in images rather than in the associated text. As a result, present-day unimodal text-based methods cannot cope with multimodal hateful content. In this paper, we propose a novel multimodal neural network powered by contrastive learning for identifying offensive posts on social media utilizing both visual and textual information. We design the text and visual encoders with a lightweight architecture to make the solution efficient for real-world use. Evaluation on the MMHS150K dataset shows state-of-the-art performance of 82.6 percent test accuracy, an improvement of approximately +14.1 percent accuracy over the previous best performing benchmark model on the dataset.

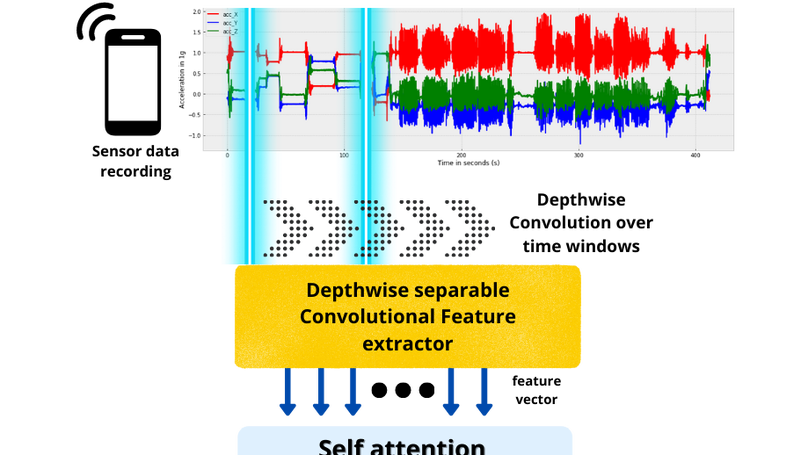

In recent times, the surge in the use of smartphones in our daily lives has created a huge opportunity for paving the road towards human-centric computing by utilizing the rich data recorded by their multiple sensors. Sensor-based human activity recognition has a tremendous number of real-world applications such as health monitoring, surveillance, smart homes, and ambient assisted living. This paper presents a joint residual feature extractor and a transformer-based deep neural network for end-to-end human activity recognition using raw multi-sensor data captured from smartphones or wearable devices. Without relying on conventional handcrafted feature extraction, this approach outperforms existing approaches, showing state-of-the-art generalizable performance across multiple benchmark datasets. It achieves a test accuracy of 95.2% on the UCI HAR dataset and 96.4% on the WISDM dataset.

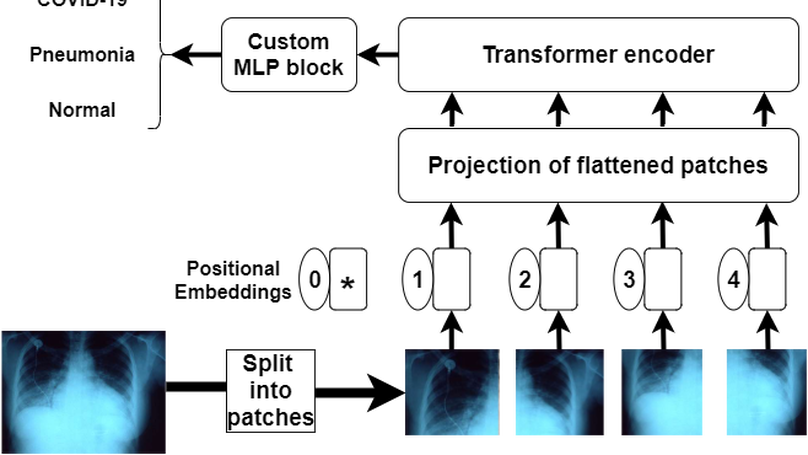

In the recent pandemic, accurate and rapid testing of patients remained a critical task in the diagnosis and control of COVID-19 disease spread in the healthcare industry. Because of the sudden increase in cases, most countries have faced scarcity and a low rate of testing. Chest x-rays have been shown in the literature to be a potential source of testing for COVID-19 patients, but manually checking x-ray reports is time-consuming and error-prone. Considering these limitations and the advancements in data science, we proposed a Vision Transformer based deep learning pipeline for COVID-19 detection from chest x-ray based imaging. Due to the lack of large data sets, we collected data from three open-source data sets of chest x-ray images and aggregated them to form a 30K image data set, which is, to our knowledge, the largest publicly available collection of chest x-ray images in this domain. Our proposed transformer model effectively differentiates COVID-19 from normal chest x-rays with an accuracy of 98% and an AUC score of 99% in the binary classification task. It distinguishes COVID-19, normal, and pneumonia patients’ x-rays with an accuracy of 92% and an AUC score of 98% in the multi-class classification task. For evaluation on our data set, we fine-tuned some of the widely used models in the literature, namely EfficientNetB0, InceptionV3, ResNet50, MobileNetV3, Xception, and DenseNet-121, as baselines. Our proposed transformer model outperformed them on all metrics. In addition, a Grad-CAM based visualization is created, which makes our approach interpretable by radiologists and can be used to monitor the progression of the disease in the affected lungs, assisting healthcare.

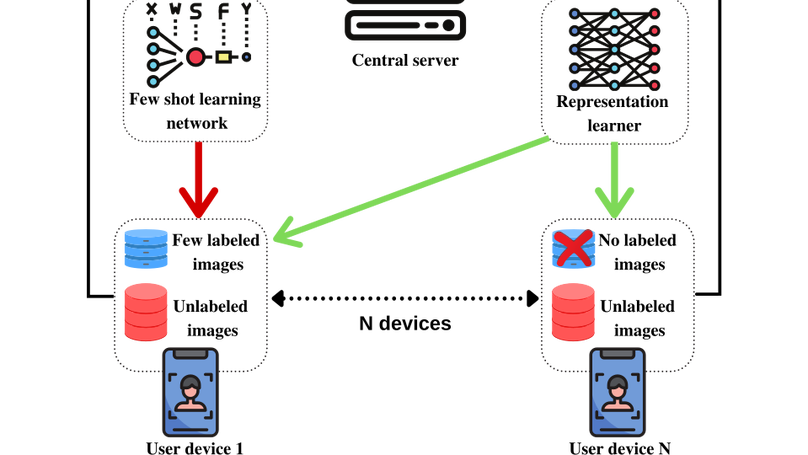

Annotation of large-scale facial expression datasets in the real world is a major challenge because of the privacy concerns of individuals, due to which traditional supervised learning approaches do not scale. Moreover, training models on large curated datasets often leads to dataset bias, which reduces generalizability for real-world use. Federated learning is a recent paradigm for training models collaboratively with decentralized private data on user devices. In this paper, we propose a few-shot federated learning framework which utilizes a few samples of labeled private facial expression data to train local models in each training round and aggregates all the local model weights in the central server to get a globally optimal model. In addition, as the user devices are a large source of unlabeled data, we design a federated learning based self-supervised method to disjointly update the feature extractor network on unlabeled private facial data in order to learn robust and diverse face representations. Experimental results from testing the globally trained model on benchmark datasets (FER-2013 and FERG) show performance comparable with state-of-the-art centralized approaches. To the best of the authors’ knowledge, this is the first work on few-shot federated learning for facial expression recognition.

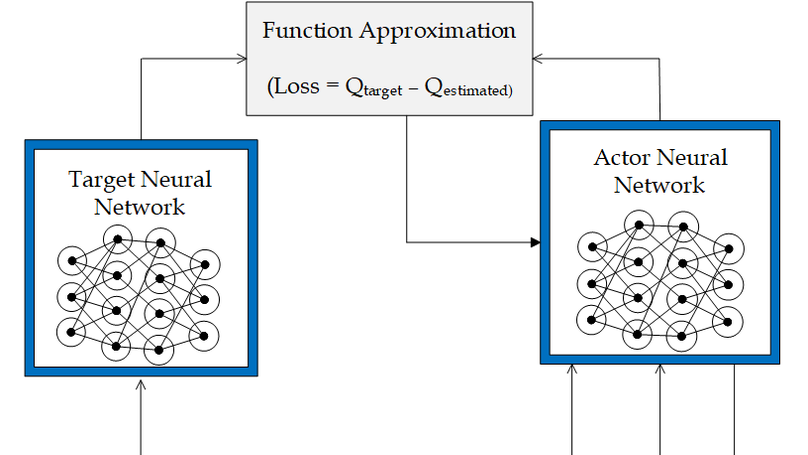

We consider a system model in which several energy harvesting (EH) unmanned aerial vehicles (UAVs), often known as drones, are deployed with device-to-device (D2D) communication networks. For the considered system model, we formulate an optimization problem that aims to find an optimal transmit power vector which maximizes the sum rate of the D2D network while also meeting the minimum energy requirements of the UAVs. Because of the nature of the system model, it is necessary to deliver solutions in real time, i.e., within a channel coherence time. As a result, conventional non-data-driven optimization methods are inapplicable, as either their run-time overheads are prohibitively expensive or their solutions are significantly suboptimal. In this paper, we address this problem by proposing a deep unsupervised learning (DUL) based hybrid scheme in which a deep neural network (DNN) is complemented by the full power scheme. It is shown through simulations that our proposed hybrid scheme provides up to 91% higher sum rate than an existing fully non-data-driven scheme, and that our scheme is able to obtain solutions quite efficiently, i.e., within a channel coherence time.

In order to meet the extremely heterogeneous requirements of next-generation wireless communication networks, the research community is increasingly dependent on machine learning solutions for real-time decision-making and radio resource management. Traditional machine learning employs a fully centralized architecture in which the entire training data is collected at one node, e.g., a cloud server, which significantly increases the communication overheads and also raises severe privacy concerns. To address this, a distributed machine learning paradigm termed Federated learning (FL) has been proposed recently. In FL, each participating edge device trains its local model by using its own training data. Then, via the wireless channels, the weights or parameters of the locally trained models are sent to the central parameter server (PS), which aggregates them and updates the global model. On the one hand, FL plays an important role in optimizing the resources of wireless communication networks; on the other hand, wireless communications is crucial for FL. Thus, a 'bidirectional' relationship exists between FL and wireless communications. Although FL is an emerging concept, many works have already been published in the domain of FL and its applications for next-generation wireless networks. Nevertheless, we noticed that none of these works have highlighted the bidirectional relationship between FL and wireless communications. Therefore, the purpose of this survey paper is to bridge this gap in the literature by providing a timely and comprehensive discussion on the interdependency between FL and wireless communications.

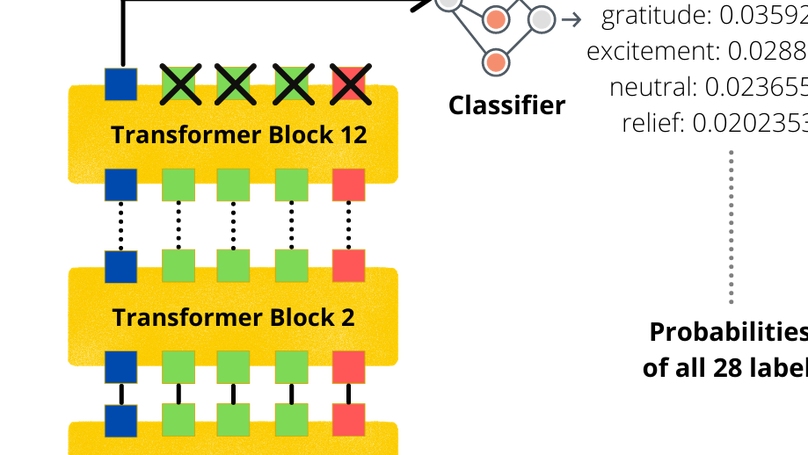

Emotion recognition is a critical sub-field within Affective Computing and a step towards comprehensive natural language understanding. This might pave the way for a wide range of applications in natural language processing for social good, such as suicide prevention and employee mood detection, as well as helping businesses provide personalized services to their users. Due to a scarcity of resources and a lack of standard labeled textual corpora for emotions in Hindi, constructing an emotion analysis system is a tough task in such a low-resource language. This paper presents a novel multilabel Hindi text dataset of 58,000 text samples with 28 emotion labels, and a Multilingual BERT-based transformer model fine-tuned on this fine-grained dataset. The model achieves state-of-the-art performance with an overall ROC-AUC score of 0.92 upon evaluation.

5G wireless networks use the network slicing technique, which provides a suitable network for a service requirement raised by a network user. Further, the network performs effective slice management to improve throughput and massive connectivity, along with the required latency, through appropriate resource allocation to these slices for service requirements. This paper presents an online Deep Q-learning based network slicing technique that considers a sigmoid-transformed Quality of Experience, price satisfaction, and spectral efficiency as the reward function for bandwidth allocation and slice selection to serve the network users. The Next Generation Mobile Network (NGMN) vertical use cases have been considered for the simulations, which also deal with the problem of international roaming and diverse intra-use case requirement variations by using only three standard network service slices, termed enhanced Mobile Broadband (eMBB), Ultra Reliable Low Latency Communication (uRLLC), and massive Machine Type Communication (mMTC). Our Deep Q-Learning model also converges significantly faster than the conventional Deep Q-Learning based approaches used in this field. The environment has been prepared based on ITU specifications for eMBB, uRLLC, and mMTC. Our proposed method demonstrates a superior Quality of Experience for the different users and higher network bandwidth efficiency compared to the conventional slicing technique.

Certifications

Projects

Coming soon, website under construction!

Recent & Upcoming Talks

Coming soon, website under construction!

Recent Posts

Coming soon, blog under construction!

Contact

- debadityashome9@gmail.com

- 918927630639

- Park Plaza, Kharagpur, West Bengal 721301

- Enter Building and take the stairs to Floor 2